TEACHER EDUCATION

Friday 21 September 2018

Thursday 13 September 2018

Norm-Referenced and Criterion-Referenced Testing

There are two types of standardized tests: norm-referenced and criterion-referenced. Norm-referenced testing measures performance relative to all other students taking the same test. It tells you how well a student did compared to the rest of the testing population. For example, if a student is ranked in the 86th percentile, he or she did better than 86 percent of the others who took the test. This is the most common type of standardized testing. Criterion-referenced testing measures factual knowledge of a defined body of material. The multiple-choice tests people take to obtain a license and a test on fractions are both examples of this type of testing.

In addition to these two main categories, standardized tests can be divided further into performance tests and aptitude tests. Performance tests assess what learning has already occurred in a particular subject area, while aptitude tests assess abilities or skills considered important to future success in school.

Intelligence tests are also standardized tests; they aim to determine how well a person can handle problem solving that requires higher-level cognitive thinking. Commonly called IQ tests, they typically pose problems involving pattern recognition and logical reasoning. Scoring then takes into account the time needed and how many questions the person answers correctly, with penalties for guessing. The specific tests used, and how their results are used, vary from district to district, but intelligence testing is common during the early years of schooling.
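The difference between the two interpretations of a score can be sketched in Python. This is a minimal illustration, not the scoring procedure of any published test: `percentile_rank` and `meets_criterion` are hypothetical helper names, percentile rank is computed here as the percentage of the norm group scoring strictly below a given score (other conventions also count half of any tied scores), and the 80 percent mastery cut score is an assumed value.

```python
from bisect import bisect_left


def percentile_rank(score: float, norm_group: list[float]) -> float:
    """Norm-referenced view: percentage of the norm group scoring below `score`."""
    if not norm_group:
        raise ValueError("norm group must not be empty")
    ordered = sorted(norm_group)
    below = bisect_left(ordered, score)  # number of scores strictly below `score`
    return 100.0 * below / len(ordered)


def meets_criterion(score: float, max_score: float, cut_fraction: float = 0.8) -> bool:
    """Criterion-referenced view: compare with a fixed mastery cut (assumed 80%)."""
    return score / max_score >= cut_fraction


# Hypothetical norm group: 100 pupils who scored 1, 2, ..., 100.
norm_group = list(range(1, 101))
print(percentile_rank(87, norm_group))  # 86.0, i.e. the 86th percentile
print(meets_criterion(87, 100))         # True
```

The same raw score of 87 would have a much lower percentile rank in a stronger norm group, while its criterion-referenced result would not change, because that result depends only on the fixed cut score.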

(b) Advantages

• It can be obtained easily and is available at the researcher’s convenience.

• It can be adopted and implemented quickly.

• It reduces or eliminates faculty time demands in instrument development and grading.

• It allows objective scoring.

• It can provide evidence of the test’s external validity.

• It provides reference-group measures.

• It allows longitudinal comparisons.

• It can test large numbers of students.

(c) Disadvantages

• It measures relatively superficial knowledge or learning.

• Norm-referenced data may be less useful than criterion-referenced data.

• It may be cost-prohibitive to administer as a pre- and post-test.

• It is more summative than formative (it may be difficult to isolate what changes are needed).

• It may be difficult to receive results in a timely manner.

Types, Advantages and Disadvantages of Rating Scales

Numerical Rating Scales:

A sequence of numbers is assigned to descriptive categories; the rater marks a number to indicate the degree to which a characteristic is present.

Graphic Rating Scales:

A set of categories is described at certain points along a line representing a continuum; the rater can mark his or her judgment at any location on the line.
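One way to turn a mark on a graphic rating scale into a number is to measure its distance along the line and map it onto the scale points. The sketch below assumes a 100 mm line whose left end is the lowest point, evenly spaced scale points, and a four-point scale built from the descriptors never, rarely, sometimes and always that this post gives as examples; `graphic_to_numeric` and `nearest_category` are hypothetical names.

```python
import math


def graphic_to_numeric(mark_mm: float, line_length_mm: float = 100.0, points: int = 5) -> float:
    """Map a mark's distance from the left end of the line onto a 1..points scale."""
    if not 0.0 <= mark_mm <= line_length_mm:
        raise ValueError("the mark must lie on the line")
    return 1 + (mark_mm / line_length_mm) * (points - 1)


def nearest_category(value: float, labels: list[str]) -> str:
    """Return the descriptor of the nearest scale point (labels[0] is point 1)."""
    index = math.floor(value + 0.5) - 1  # round half up, then shift to 0-based
    return labels[index]


labels = ["never", "rarely", "sometimes", "always"]  # assumed 4-point scale
value = graphic_to_numeric(62, points=len(labels))   # mark placed 62 mm along the line
print(round(value, 2), nearest_category(value, labels))  # 2.86 sometimes
```

A numerical rating scale skips the measuring step: the rater records the number directly.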

(a) Advantages

• They can be used for behaviours not easily measured by other means.

• They are quick and easy to complete.

• The user can apply knowledge about the child gained at other times.

• Minimal training is required.

• They are easy to design using consistent descriptors (e.g., always, sometimes, rarely, or never).

• They can describe the child’s steps toward understanding or mastery.

(b) Disadvantages

Highly subjective (rater error and bias are a common problem).

Raters may rate a child on the basis of their previous interactions or on an emotional, rather than an objective, basis.

Ambiguous terms make them unreliable: raters are likely to mark characteristics by using different interpretations of the ratings (e.g., do they all agree on what “sometimes” means?).
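The ambiguity problem can be made visible by having two raters score the same children and comparing their ratings. The sketch below computes simple percent agreement and Cohen's kappa, which corrects agreement for chance; the ratings are invented for illustration, and the function names are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a: list[str], rater_b: list[str]) -> float:
    """Percentage of children given exactly the same rating by both raters."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must rate the same, non-empty set of children")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Agreement beyond what would be expected by chance (1.0 = perfect agreement)."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b) / 100.0
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both raters independently pick the same category.
    categories = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1.0 - expected)


# Hypothetical ratings of six children on the same trait.
rater_a = ["always", "sometimes", "sometimes", "rarely", "never", "sometimes"]
rater_b = ["always", "rarely", "sometimes", "sometimes", "never", "rarely"]
print(percent_agreement(rater_a, rater_b))       # 50.0
print(round(cohens_kappa(rater_a, rater_b), 2))  # 0.31
```

Low agreement centred on a descriptor such as "sometimes" suggests the raters are interpreting that point on the scale differently.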

What is a Rating Scale?

A rating scale is a tool used for assessing the performance of tasks, skill levels, procedures, processes, qualities, quantities, or end products, such as reports, drawings, and computer programs. These are judged at a defined level within a stated range. Rating scales are similar to checklists except that they indicate the degree of accomplishment rather than just yes or no. A rating scale is therefore used to determine the degree to which the child exhibits a behaviour, or the quality of that behaviour: each trait is rated on a continuum, and the observer decides where the child fits on the scale. Overall, rating scales:

• Make a qualitative judgment about the extent to which a behaviour is present

• Consist of a set of characteristics or qualities to be judged by using a systematic procedure

• Are most frequently of the numerical or graphic type

What are the Advantages and Disadvantages of Interviews?

Advantages of interview

Very good technique for obtaining information about complex, emotionally laden subjects.

Can easily be adapted to the ability of the person being interviewed.

Yields a good percentage of returns.

Can yield a more representative sample of the general population.

Data collected by this method are likely to be more accurate than data collected by other methods, because issues can be investigated in depth.

Discover how individuals think and feel about a topic and why they hold certain opinions

Investigate the use, effectiveness and usefulness of particular library collections and services

Inform decision making, strategic planning and resource allocation

Explore sensitive topics which people may feel uncomfortable discussing in a focus group

Add a human dimension to impersonal data

Deepen understanding and explain statistical data.

Disadvantages of interview

Time-consuming process.

Involves high cost.

Requires highly skilled interviewer.

Requires more energy.

May sometimes involve systematic errors.

Can be a confusing and complicated method.

Different interviewers may understand and transcribe interviews in different ways.

What are the Types of Interview?

1. Structured Interview

Here, every detail of the interview is decided in advance: the questions to be asked, the order in which they will be asked, the time given to each candidate, the information to be collected from each candidate, and so on. A structured interview is also called a standardized, patterned, directed or guided interview. Because structured interviews are preplanned, they are accurate and precise, and all the interviews are uniform. Therefore, structured interviews offer consistency and minimum bias.

2. Unstructured Interview

This interview is not planned in detail, so it is also called a non-directed interview. The questions to be asked, the information to be collected from the candidates, etc. are not decided in advance. Because these interviews are not planned, they are more flexible. Candidates are more relaxed in such interviews and are encouraged to express themselves on different subjects, based on their expectations, motivations, background, interests, etc. Here the interviewer can make a better judgment of the candidate's personality, potential, strengths and weaknesses. However, if the interviewer is not skilled, the discussion will lose direction and the interview will be a waste of time and effort.

3. Group Interview

Here, all the candidates, or small groups of candidates, are interviewed together, which saves the interviewer's time. A group interview is similar to a group discussion: a topic is given to the group, and the candidates are asked to discuss it. The interviewer carefully watches the candidates, trying to find out which candidate influences others, who clarifies issues, who summarizes the discussion, who speaks effectively, etc., and judges the behaviour of each candidate in a group situation.

4. Exit Interview

When an employee leaves the company, he is interviewed either by his immediate superior or by the Human Resource Development (HRD) manager. This is called an exit interview. An exit interview is conducted to find out why the employee is leaving the company. Sometimes the employee may be offered incentives to withdraw his resignation. Exit interviews are also used to create a good image of the company in the minds of departing employees. They help the company to make proper HRD policies, to create a favourable work environment, to build employee loyalty and to reduce labour turnover.

5. Depth Interview

This is a semi-structured interview. The candidate has to give detailed information about his background, special interests, etc., as well as about his subject. A depth interview tries to find out whether or not the candidate is an expert in his subject. Here, the interviewer must have a good understanding of human behaviour.

6. Stress Interview

The purpose of this interview is to find out how the candidate behaves in a stressful situation: whether he gets angry, confused, frightened or nervous, or remains cool. The candidate who keeps his cool under stress is selected for the stressful job. The interviewer deliberately creates a stressful situation during the interview, for example by asking rapid questions, criticizing the candidate's answers, or interrupting him repeatedly. The interviewee's behaviour is then observed, and future educational planning can be based on his or her stress levels and handling of stress.

7. Individual Interview

This is a 'One-To-One' interview: a verbal and visual interaction between two people, the interviewer and the candidate, for a particular purpose. The purpose of this interview is to match the candidate with the job. It is two-way communication.

8. Informal Interview

An informal interview is an oral interview that can be arranged at any place. Different questions are asked to collect the required information from the candidate, and no rigid procedure is followed. It is a friendly interview.

9. Formal Interview

A formal interview is held in a more formal atmosphere. The interviewer asks pre-planned questions. A formal interview is also called a planned interview.

10. Panel Interview

A panel is a selection committee or interview committee appointed to interview the candidates. The panel may include three or five members. They ask the candidates questions about different aspects and give marks to each candidate. The final decision is taken by all members collectively by rating the candidates. A panel interview is generally better than an interview by one interviewer because collective judgment is used to select suitable candidates.

11. Behavioural Interview

In a behavioural interview, the interviewer will ask you questions based on common situations in the job you are applying for. The logic behind the behavioural interview is that your past performance in a similar situation predicts your future performance. You should expect questions about what you did when you were in a particular situation and how you dealt with it. In a behavioural interview, the interviewer wants to see how you deal with certain problems and what you do to solve them.

12. Phone Interview

A phone interview may be used for a position where the candidate is not local, or as an initial prescreening call to decide whether to invite you for an in-person interview. You may be asked typical questions or behavioural questions. Most of the time you will schedule an appointment for a phone interview. If the interviewer calls unexpectedly, it's OK to ask politely to schedule an appointment. During a phone interview, make sure your call waiting is turned off, you are in a quiet room, and you are not eating, drinking or chewing gum.
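The collective rating described for the panel interview can be sketched as averaging each member's marks and ranking the candidates. This assumes the panel combines marks with a simple mean, which is only one possibility (a panel may instead reach a consensus by discussion); `rank_candidates`, the candidates and the marks are all hypothetical.

```python
def rank_candidates(panel_marks: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Average each candidate's marks across panel members and sort best first."""
    averages = {
        name: sum(marks) / len(marks)  # one mark per panel member
        for name, marks in panel_marks.items()
    }
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Hypothetical marks out of 10 from a three-member panel.
marks = {"Candidate A": [7, 8, 6], "Candidate B": [9, 6, 8], "Candidate C": [5, 7, 6]}
for name, average in rank_candidates(marks):
    print(f"{name}: {average:.2f}")
# Candidate B: 7.67
# Candidate A: 7.00
# Candidate C: 6.00
```

Averaging means no single member's unusually high or low mark decides the outcome on its own, which is the point of using collective judgment.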

Tuesday 11 September 2018

Relationship between Validity and Reliability of a Test

Reliability and validity are two different standards used to gauge the usefulness of a test. Though different, they work together. It would not be beneficial to design a test with good reliability that did not measure what it was intended to measure. The inverse, accurately measuring what we desire to measure with a test so flawed that its results are not reproducible, is impossible. Reliability is a necessary requirement for validity: you must have good reliability in order to have validity. Reliability actually puts a cap, or limit, on validity, and if a test is not reliable, it cannot be valid. Establishing good reliability is only the first part of establishing validity; validity has to be established separately. Good reliability does not mean good validity; it just means we are measuring something consistently. We must then establish what it is that we are measuring consistently. The main point here is that reliability is necessary but not sufficient for validity. In short, reliability means nothing when the problem is validity.
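In classical test theory, the cap that reliability puts on validity is usually written as r_xy ≤ √(r_xx × r_yy), where r_xy is the correlation between the test and a criterion, and r_xx and r_yy are the reliabilities of the test and the criterion. This formula is a standard result brought in here to illustrate the point, not part of the original notes; the sketch below simply evaluates it, and `max_validity` is a hypothetical name.

```python
import math


def max_validity(test_reliability: float, criterion_reliability: float = 1.0) -> float:
    """Upper bound on a validity coefficient implied by the two reliabilities."""
    for r in (test_reliability, criterion_reliability):
        if not 0.0 <= r <= 1.0:
            raise ValueError("reliability coefficients must lie between 0 and 1")
    return math.sqrt(test_reliability * criterion_reliability)


print(round(max_validity(0.81), 2))        # 0.9, even against a perfectly reliable criterion
print(round(max_validity(0.64, 0.81), 2))  # 0.72
print(max_validity(0.0))                   # 0.0: an unreliable test cannot be valid
```

A perfectly reliable test (reliability 1.0) only removes the ceiling; whether the test actually measures the intended trait still has to be shown separately.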

Factors Affecting the Validity of a Test

Validity evidence is an important aspect to consider when thinking about classroom testing and measurement. There are many factors that tend to make test results invalid for their intended use. A little careful effort by the test developer helps to control these factors, but some of them need a systematic approach. No teacher would think of measuring knowledge of social studies with an English test, nor would a teacher consider measuring problem-solving skills in third-grade arithmetic with a test designed for sixth graders. In both instances, the test results would obviously be invalid. The factors influencing validity are of this same general nature but much more subtle in character. For example, a teacher may overload a social studies test with items concerning historical facts, so that the scores are less valid as a measure of achievement in social studies. Or a third-grade teacher may select appropriate arithmetic problems for a test but use vocabulary in the problems and directions that only the better readers are able to understand. The arithmetic test then becomes, in part, a reading test, which invalidates the results for their intended use. These examples show some of the more subtle factors influencing validity, to which the teacher should be alert whether constructing classroom tests or selecting published tests. Some other factors that may affect test validity are discussed below.

1. Instructions to Take A Test:

The instructions with the test should be clear, understandable and written in simple language. Instructions that do not make clear how pupils should respond to the items, whether it is permissible to guess, and how to record their answers will tend to reduce validity.

2. Difficult Language Structure:

Language in the test or its instructions that is too complicated for the pupils taking the test will result in the test measuring reading comprehension and aspects of intelligence, which will distort the meaning of the test results. The language should therefore be kept simple, considering the grade for which the test is meant.

3. Inappropriate Level of Difficulty:

In norm-referenced tests, items that are too easy or too difficult will not provide reliable discriminations among pupils and will therefore lower validity. In criterion-referenced tests, failure to match the difficulty specified by the learning outcome will lower validity.
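A common way to check difficulty is the item difficulty index p, the proportion of pupils who answer an item correctly. The sketch below computes p for each item and flags items that nearly everyone or almost no one answers correctly; the function names, the 0.2 and 0.9 thresholds and the response data are assumptions for illustration, not fixed rules.

```python
def difficulty_index(responses: list[int]) -> float:
    """Proportion of pupils answering correctly (1 = correct, 0 = incorrect)."""
    return sum(responses) / len(responses)


def flag_items(items: dict[str, list[int]], low: float = 0.2, high: float = 0.9) -> dict[str, str]:
    """Flag items whose difficulty index falls outside the assumed [low, high] band."""
    flags = {}
    for item, responses in items.items():
        p = difficulty_index(responses)
        if p > high:
            flags[item] = "too easy"
        elif p < low:
            flags[item] = "too difficult"
    return flags


# Hypothetical responses of five pupils to three items.
items = {"Q1": [1, 1, 1, 1, 1], "Q2": [1, 0, 1, 0, 1], "Q3": [0, 0, 0, 0, 0]}
print(flag_items(items))  # {'Q1': 'too easy', 'Q3': 'too difficult'}
```

These bands only make sense for a norm-referenced test; for a criterion-referenced test, the benchmark is the difficulty specified by the learning outcome.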

4. Poorly Constructed Test Items:

Poorly constructed items, such as items that point toward the answer or that unintentionally reward pupils who are alert to clues, may harm the validity of the test.

5. Ambiguity in Item Statements:

Ambiguous statements in test items contribute to misinterpretation and confusion. Ambiguity sometimes confuses the better pupils more than it does the poorer pupils, causing the items to discriminate in a negative direction.
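Negative discrimination can be checked with the discrimination index D: the proportion of high-scoring pupils who answer an item correctly minus the proportion of low-scoring pupils who do. The sketch below compares the top and bottom 27 percent of pupils by total score (rounded down), a conventional but not mandatory choice; the function name and data are hypothetical.

```python
def discrimination_index(item_correct: list[int], total_scores: list[float],
                         fraction: float = 0.27) -> float:
    """D = p(upper group) - p(lower group); a negative D flags a faulty item."""
    n = len(total_scores)
    if n != len(item_correct) or n < 2:
        raise ValueError("need matching item and total scores for at least two pupils")
    if not 0.0 < fraction <= 0.5:
        raise ValueError("fraction must be in (0, 0.5]")
    k = max(1, int(n * fraction))                            # size of each extreme group
    order = sorted(range(n), key=lambda i: total_scores[i])  # lowest total first
    lower, upper = order[:k], order[-k:]
    p_upper = sum(item_correct[i] for i in upper) / k
    p_lower = sum(item_correct[i] for i in lower) / k
    return p_upper - p_lower


# Hypothetical class of ten pupils, listed from highest to lowest total score.
totals = [30, 28, 25, 22, 20, 18, 15, 12, 10, 8]
ambiguous_item = [0, 0, 0, 1, 1, 1, 1, 1, 1, 1]  # the better pupils get it wrong
clear_item = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
print(discrimination_index(ambiguous_item, totals))  # -1.0
print(discrimination_index(clear_item, totals))      # 1.0
```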

6. Length of the Test:

A test is only a sample of the many questions that might be asked. If a test is too short to provide a representative sample of the performance we are interested in, its validity will suffer accordingly. Similarly, an overly long test is also a threat to the validity evidence of the test.

7. Improper Arrangement of Items:

Test items are typically arranged in order of difficulty, with the easiest items first. Placing difficult items early in the test may cause pupils to spend too much time on these and prevent them from reaching items they could easily answer. Improper arrangement may also influence validity by having a detrimental effect on pupil motivation. The influence is likely to be strongest with young pupils.
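Given a difficulty index p for each item (the proportion of pupils answering it correctly, for example from a pilot administration), arranging items easiest first amounts to sorting by p from highest to lowest. The function name, item labels and p-values below are hypothetical.

```python
def arrange_easiest_first(difficulty: dict[str, float]) -> list[str]:
    """Order item labels by difficulty index p, highest p (easiest) first."""
    # sorted() is stable even with reverse=True, so items with equal p keep their order.
    return sorted(difficulty, key=lambda item: difficulty[item], reverse=True)


pilot_p = {"Q1": 0.45, "Q2": 0.90, "Q3": 0.70, "Q4": 0.30}
print(arrange_easiest_first(pilot_p))  # ['Q2', 'Q3', 'Q1', 'Q4']
```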

8. Identifiable Pattern of Answers:

Placing correct answers in some systematic pattern will enable pupils to guess the answers to some items more easily, and this will lower validity.
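Before a test is printed, the answer key can be checked for obvious patterns. The sketch below, using hypothetical function names and keys, reports the longest run of identical answers and whether the key simply repeats a short cycle such as ABCDABCD; the maximum cycle length of 4 is an assumption.

```python
def longest_run(key: str) -> int:
    """Length of the longest run of the same answer letter in a row."""
    if not key:
        return 0
    best = current = 1
    for previous, letter in zip(key, key[1:]):
        current = current + 1 if letter == previous else 1
        best = max(best, current)
    return best


def repeats_short_cycle(key: str, max_period: int = 4) -> bool:
    """True if the whole key repeats a pattern of at most `max_period` answers."""
    for period in range(1, max_period + 1):
        if len(key) > period and all(key[i] == key[i % period] for i in range(len(key))):
            return True
    return False


print(longest_run("ABBBCDAC"), repeats_short_cycle("ABBBCDAC"))      # 3 False
print(longest_run("ABCDABCDAB"), repeats_short_cycle("ABCDABCDAB"))  # 1 True
```

Neither check proves a key is free of patterns; it only catches the most obvious ones that pupils might exploit.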

In short, any defect in the test's construction that prevents the test items from functioning as intended will invalidate the interpretations to be drawn from the results. Many other factors can also affect the validity of a test to some extent. Some of these are listed below.

Inadequate sample

Inappropriate selection of constructs or measures.

Items that do not function as intended

Improper administration: inadequate time allowed, poorly controlled conditions

Scoring that is subjective

Insufficient data collected to make valid conclusions.

Too great a variation in data (can't see the wood for the trees).

Inadequate selection of target subjects.

Complex interaction across constructs.

Subjects giving biased answers or trying to guess what they should say.

VALIDITY OF THE ASSESSMENT TOOLS

Nature of Validity

The validity of an assessment tool is the degree to which it measures what it is designed to measure. For example, if a test is designed to measure the skill of three-digit addition in mathematics, but the problems are presented in language that is too difficult for the students' ability level, then it may not measure three-digit addition and consequently will not be a valid test. Many measurement experts have defined this term; some of their definitions are given below.

According to Business Dictionary the “Validity is the degree to which an instrument, selection process, statistical technique, or test measures what it is supposed to measure.”

Cook and Campbell (1979) define validity as the appropriateness or correctness of inferences, decisions, or descriptions made about individuals, groups, or institutions from test results.

According to the standards document of the APA (American Psychological Association), validity is the most important consideration in test evaluation. The concept refers to the appropriateness, meaningfulness, and usefulness of the specific inferences made from test scores. Test validation is the process of accumulating evidence to support such inferences. Validity, however, is a unitary concept. Although evidence may be accumulated in many ways, validity always refers to the degree to which that evidence supports the inferences that are made from the scores. The inferences regarding specific uses of a test are validated, not the test itself.

Howell (1992) holds that a valid test must measure specifically what it is intended to measure.

According to Messick, validity is a matter of degree; a test is not absolutely valid or absolutely invalid. He argues that, over time, validity evidence will continue to accumulate, either enhancing or contradicting previous findings. Overall, in terms of assessment, validity refers to the extent to which a test's content is representative of the actual skills learned and whether the test allows accurate conclusions concerning achievement. Validity is therefore the extent to which a test measures what it claims to measure. It is vital for a test to be valid in order for its results to be accurately applied and interpreted. Consider the following examples.

Examples:

1. Say you are assigned to observe the effect of strict attendance policies on class participation. After observing for two or three weeks, you report that class participation did increase after the policy was established.

2. Say you intend to measure intelligence. If math and vocabulary truly represent intelligence, then a math and vocabulary test might be said to have high validity when used as a measure of intelligence.

A test has validity evidence if we can demonstrate that it measures what it claims to measure. For instance, if it is supposed to be a test of fifth-grade arithmetic ability, it should measure fifth-grade arithmetic ability and not reading ability.

Test Validity and Test Validation

Tests can take the form of written responses to a series of questions, such as paper-and-pencil tests, or of expert judgments about behaviour in the classroom or school, or in a work performance appraisal. The form of test results also varies, from pass/fail, to holistic judgments, to a complex series of numbers meant to convey minute differences in behaviour.

Regardless of the form a test takes, its most important aspect is how the results are used and the way those results impact individual persons and society as a whole. Tests used for admission to schools or programs or for educational diagnosis not only affect individuals, but also assign value to the content being tested. A test that is perfectly appropriate and useful in one situation may be inappropriate or insufficient in another. For example, a test that may be sufficient for use in educational diagnosis may be completely insufficient for use in determining graduation from high school.

Test validity, or the validation of a test, explicitly means validating the use of a test in a specific context, such as college admission or placement into a course. Therefore, when determining the validity of a test, it is important to study the test results in the setting in which they are used. In the previous example, in order to use the same test for educational diagnosis as for high school graduation, each use would need to be validated separately, even though the same test is used for both purposes.

Purpose of Measuring Validity

Most, but not all, tests are designed to measure skills, abilities, or traits that are not directly observable. For example, scores on the Scholastic Aptitude Test (SAT) measure developed critical reading, writing and mathematical ability. The SAT score an examinee obtains is not a direct measure of critical reading ability in the way that degrees Celsius are a direct measure of an object's temperature. The amount of an examinee's developed critical reading ability must be inferred from the examinee's SAT critical reading score.

The process of using a test score as a sample of behaviour in order to draw conclusions about a larger domain of behaviours is characteristic of most educational and psychological tests. Responsible test developers and publishers must be able to demonstrate that it is possible to use the sample of behaviours measured by a test to make valid inferences about an examinee's ability to perform tasks that represent the larger domain of interest.

Validity versus Reliability

A test can be reliable but not valid. If test scores are to be used to make accurate inferences about an examinee's ability, they must be both reliable and valid. Reliability is a prerequisite for validity and refers to the ability of a test to measure a particular trait or skill consistently. In simple words, the same test administered to the same students should yield the same scores. However, tests can be highly reliable and still not be valid for a particular purpose. Consider a thermometer with a systematic error that makes it read five degrees too high. When repeated readings are taken under the same conditions, the thermometer will yield consistent (reliable) measurements, but the inference about the temperature is faulty.

This analogy makes it clear that determining the reliability of a test is an important first step, but not the defining step, in determining the validity of a test.
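The thermometer analogy can be made concrete in a few lines of Python. This is a minimal sketch with made-up numbers: `TRUE_TEMPERATURE`, `BIAS` and the small `noise` values are hypothetical. The readings barely vary from one another (they are consistent, i.e. reliable), yet each one is about five degrees off, so any inference about the real temperature is wrong.

```python
import statistics

TRUE_TEMPERATURE = 20.0  # hypothetical true temperature in degrees Celsius
BIAS = 5.0               # hypothetical systematic error: reads 5 degrees too high

# Hypothetical small random fluctuations between repeated readings
noise = [0.1, -0.1, 0.0, 0.2, -0.2]
readings = [TRUE_TEMPERATURE + BIAS + e for e in noise]

# Small spread: the instrument is consistent (reliable)
spread = statistics.stdev(readings)

# Large average error: inferences about the true temperature are faulty (not valid)
error = statistics.mean(readings) - TRUE_TEMPERATURE

print(f"spread of readings: {spread:.2f}")
print(f"average error: {error:+.2f}")
```

Reducing `noise` would make the thermometer even more reliable, but it would do nothing about `BIAS`; only comparing against an independent, trusted standard reveals the validity problem.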

Methods of Measuring Validity

If validity is the appropriateness of a particular use of test scores, then test validation is the process of collecting evidence to justify that intended use. Several types of validity evidence can be collected to establish the usefulness of an assessment tool; some of them are described below.

Content Validity

Content validity evidence comes from a judgmental process, which may be formal or informal. The formal process follows a systematic procedure to arrive at a judgment; its important components are the identification of behavioural objectives and the construction of a table of specifications. Content validity evidence involves the degree to which the content of the test matches a content domain associated with the construct. For example, a test of the ability to add two numbers should include a range of combinations of digits; a test with only one-digit numbers, or only even numbers, would not have good coverage of the content domain. Content-related evidence typically involves subject matter experts (SMEs) evaluating test items against the test specifications.

It is a non-statistical type of validity that involves “the systematic examination of the test content to determine whether it covers a representative sample of the behaviour domain to be measured” (Anastasi & Urbina, 1997). For example, does an IQ questionnaire have items covering all areas of intelligence discussed in the scientific literature?

A test has content validity built into it by careful selection of which items to include (Anastasi & Urbina, 1997). Items are chosen so that they comply with the test specification, which is drawn up through a thorough examination of the subject domain. Foxcroft et al. (2004, p. 49) note that the content validity of a test can be improved by using a panel of experts to review the test specifications and the selection of items. The experts can review the items and comment on whether they cover a representative sample of the behaviour domain.

For example, in developing a teaching competency test, experts in the field of teacher training would identify the information and issues required to be an effective teacher, and then choose (or rate) items that represent the areas of knowledge and skill a teacher is expected to exhibit in the classroom.

Lawshe (1975) proposed that, when judging content validity, each rater should respond to the following question for each item:

Is the skill or knowledge measured by this item:

Essential

Useful but not essential

Not necessary
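Lawshe (1975) turned these ratings into a content validity ratio (CVR) for each item: CVR = (n_e - N/2) / (N/2), where n_e is the number of panel members who rate the item "Essential" and N is the total number of panel members. The CVR runs from -1 (no one rates the item essential) to +1 (everyone does), and is 0 when exactly half the panel does. The sketch below assumes the ratings are stored as plain text labels; the panel and its ratings are hypothetical. Lawshe also published minimum CVR values that depend on panel size; they are not reproduced here.

```python
from collections import Counter

def content_validity_ratio(ratings):
    """Lawshe's CVR for one item: (n_e - N/2) / (N/2).

    ratings: one label per panel member, each "essential",
    "useful but not essential" or "not necessary".
    """
    n_total = len(ratings)
    n_essential = Counter(ratings)["essential"]  # only "essential" votes count
    half = n_total / 2
    return (n_essential - half) / half

# Hypothetical ratings from a panel of 8 experts for two items
item_ratings = {
    "item_1": ["essential"] * 7 + ["useful but not essential"],
    "item_2": ["essential"] * 3
    + ["useful but not essential"] * 3
    + ["not necessary"] * 2,
}

for item, ratings in item_ratings.items():
    print(item, round(content_validity_ratio(ratings), 2))
```

Here item_1 gets a CVR of 0.75 and item_2 gets -0.25, so item_2 would be a candidate for revision or removal.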

With respect to educational achievement tests, a test is considered content valid when the proportion of the material covered in the test approximates the proportion of material covered in the course.
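This proportionality check is easy to run once a table of specifications exists. The following is a minimal sketch, assuming the course emphasis is expressed as a share of instructional time per topic; the topics, shares, item counts and the 10-percentage-point `TOLERANCE` are all hypothetical choices for illustration, not a fixed rule.

```python
# Hypothetical course: share of instructional time per topic (sums to 1.0)
course_share = {"fractions": 0.40, "decimals": 0.35, "percentages": 0.25}

# Hypothetical test: number of items written for each topic
items_per_topic = {"fractions": 12, "decimals": 6, "percentages": 2}

def coverage_gaps(course_share, items_per_topic):
    """Return (share of test items) minus (share of course) for every topic."""
    total_items = sum(items_per_topic.values())
    return {
        topic: items_per_topic.get(topic, 0) / total_items - share
        for topic, share in course_share.items()
    }

TOLERANCE = 0.10  # hypothetical: flag topics off by more than 10 percentage points

for topic, gap in coverage_gaps(course_share, items_per_topic).items():
    flag = "check" if abs(gap) > TOLERANCE else "ok"
    print(f"{topic:12s} {gap:+.2f} {flag}")
```

In this made-up case fractions are over-represented and percentages under-represented, so the test would not be considered content valid for this course until the item counts are rebalanced.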

There are different types of content validity; the two major types, face validity and curricular validity, are described below.

1. Face Validity

Face validity is an estimate of whether a test appears to measure a certain criterion; it does not guarantee that the test actually measures phenomena in that domain. Face validity is very closely related to content validity. Content validity depends on a theoretical basis for deciding whether a test assesses all domains of a certain criterion (e.g. does assessing addition skills yield a good measure of mathematical skills? To answer this, you have to know what different kinds of arithmetic skills mathematical skills include). Face validity, by contrast, relates only to whether a test appears to be a good measure. This judgment is made on the "face" of the test, so it can be made even by a non-expert.

Face validity is a starting point, but it should NEVER be taken as proof that a test is valid for any given purpose, as the "experts" may be wrong.

For example, suppose you were taking an instrument reportedly measuring your attractiveness, but the questions asked you to identify the correctly spelled word in each list. There is not much of a link between what the instrument claims to do and what it actually does.

Possible Advantage of Face Validity...

• If the respondent knows what information we are looking for, they can use that “context” to help interpret the questions and provide more useful, accurate answers.

Possible Disadvantage of Face Validity...

• If the respondent knows what information we are looking for, they might try to “bend & shape” their answers to what they think we want.

2. Curricular Validity

Curricular validity is the extent to which the content of the test matches the objectives of a specific curriculum as it is formally described. Curricular validity takes on particular importance in situations where tests are used for high-stakes decisions, such as the Punjab Examination Commission exams for fifth and eighth grade students and the Boards of Intermediate and Secondary Education examinations. In these situations, curricular validity means that a test used to decide whether a student should be promoted to the next level should measure the curriculum that the student is taught in school.

Curricular validity is evaluated by groups of curriculum/content experts. The experts are asked to judge whether the content of the test is parallel to the curriculum objectives and whether the test and curricular emphases are in proper balance. A table of specifications may help to improve the validity of the test.

Construct Validity

Before defining construct validity, it is necessary to explain the concept of a construct. A construct is the concept or characteristic that a test is designed to measure. It provides the target that a particular assessment or set of assessments is designed to measure, and it is a separate entity from the test itself. According to Howell (1992), construct validity is a test's ability to measure factors which are relevant to the field of study. Construct validity is thus an assessment of the quality of an instrument or experimental design. It asks: "Does it measure the construct it is supposed to measure?" Construct validity is rarely applied to achievement tests.

Construct validity refers to the extent to which operationalizations of a construct (e.g. practical tests developed from a theory) do actually measure what the theory says they do. For example, to what extent is an IQ questionnaire actually measuring "intelligence"? Construct validity evidence involves the empirical and theoretical support for the interpretation of the construct. Such lines of evidence include statistical analyses of the internal structure of the test including the relationships between responses to different test items. They also include relationships between the test and measures of other constructs. As currently understood, construct validity is not distinct from the support for the substantive theory of the construct that the test is designed to measure. As such, experiments designed to reveal aspects of the causal role of the construct also contribute to construct validity evidence.

Construct validity is present when the theoretical constructs of cause and effect accurately represent the real-world situations they are intended to model. This depends on how well the experiment is operationalized: a good experiment turns the theory (constructs) into actual things you can measure. Sometimes simply finding out more about the construct (which itself must be valid) can be helpful. Construct validity addresses the constructs that are mapped onto the test items; it can be supported either by judgmental methods or by developing the test specification before the test itself is developed. Constructs have some essential properties, two of which are listed below:

1. They are abstract summaries of some regularity in nature.

2. They are related to concrete, observable entities.

For Example - Integrity is a construct; it cannot be directly observed, yet it is useful for understanding, describing, and predicting human behaviour.

1. Convergent Validity

Convergent validity refers to the degree to which a measure is correlated with other measures that it is theoretically predicted to correlate with.

Put another way, convergent validity is demonstrated when measures of constructs that are expected to correlate actually do so. This is similar to concurrent validity (which looks for correlation with other tests).

For example, if students' scores on a specific mathematics test are similar to their scores on other mathematics tests, then convergent validity is high (there is a positive correlation between the scores from similar tests of mathematics).

2. Discriminant Validity

Discriminant validity describes the degree to which the operationalization does not correlate with other operationalizations that it theoretically should not be correlated with.

Put another way, discriminant validity is demonstrated when measures of constructs that are expected not to relate to each other do not correlate, so that it is possible to discriminate between these constructs. For example, if discriminant validity is high, scores on a test designed to assess students' skills in mathematics should not be positively correlated with scores from tests designed to assess intelligence.

Criterion Validity

Criterion validity evidence involves the correlation between the test and a criterion variable (or variables) taken as representative of the construct. In other words, it compares the test with other measures or outcomes (the criteria) already held to be valid. For example, employee selection tests are often validated against measures of job performance (the criterion), and IQ tests are often validated against measures of academic performance (the criterion).

If the test data and criterion data are collected at the same time, this is referred to as concurrent validity evidence. If the test data is collected first in order to predict criterion data collected at a later point in time, then this is referred to as predictive validity evidence.

For example, a company psychologist comparing several selection tests (predictors) for newly hired artists would measure their job performance after they have been on the job for six months. He or she would then correlate scores on each predictor with the job performance scores to determine which one is the best predictor.

Concurrent Validity

According to Howell (1992) “concurrent validity is determined using other existing and similar tests which have been known to be valid as comparisons to a test being developed. There is no other known valid test to measure the range of cultural issues tested for this specific group of subjects”.

Concurrent validity refers to the degree to which scores taken at one point in time correlate with other measures (test, observation or interview) of the same construct taken at the same time. Returning to the selection test example, this would mean that the tests are administered to current employees and the results are then correlated with their scores on performance reviews. This measures the relationship between the new test and measures made with existing tests; the existing test is thus the criterion. For example, a measure of creativity should correlate with existing measures of creativity.

For example:

Assessing the validity of a diagnostic screening test: here the predictor (X) is the test and the criterion (Y) is the clinical diagnosis. When the correlation is large, the predictor is useful as a diagnostic tool.

Predictive Validity

Predictive validity indicates how well the test predicts some future behaviour of the examinee. It refers to the degree to which the operationalization can predict (or correlate with) other measures of the same construct that are measured at some time in the future. Again, with the selection test example, this would mean that the tests are administered to applicants, all applicants are hired, their performance is reviewed at a later time, and then their scores on the two measures are correlated. This form of validity evidence is particularly useful and important for aptitude tests, which attempt to predict how well the test taker will do in some future setting.

This measures the extent to which a future level of a variable can be predicted from a current measurement. This includes correlation with measurements made with different instruments. For example, a political poll intends to measure future voting intent. College entry tests should have a high predictive validity with regard to final exam results. When the two sets of scores are correlated, the coefficient that results is called the predictive validity coefficient.

Examples:

1. If higher scores on the Board exams are positively correlated with higher GPAs at university (and lower scores with lower GPAs), then the Board exams are said to have predictive validity.

2. We might theorize that a measure of math ability should be able to predict how well a person will do in an engineering-based profession.

Establishing predictive validity involves the following two steps.

1. Obtain test scores from a group of respondents, but do not use the test in making a decision.

2. At some later time, obtain a performance measure for those respondents, and correlate these measures with the test scores to obtain the predictive validity coefficient.

The validity of an assessment tool is the degree to which it measures for what it is designed to measure. For example if a test is designed to measure the skill of addition of three digit in mathematics but the problems are presented in difficult language that is not according to the ability level of the students then it may not measure the addition skill of three digits, consequently will not be a valid test. Many experts of measurement had defined this term, some of the definitions are given as under.

According to Business Dictionary the “Validity is the degree to which an instrument, selection process, statistical technique, or test measures what it is supposed to measure.”

Cook and Campbell (1979) define validity as the appropriateness or correctness of inferences, decisions, or descriptions made about individuals, groups, or institutions from test results.

According to APA (American Psychological association) standards document the validity is the most important consideration in test evaluation. The concept refers to the appropriateness, meaningfulness, and usefulness of the specific inferences made from test scores. Test validation is the process of accumulating evidence to support such inferences. Validity, however, is a unitary concept. Although evidence may be accumulated in many ways, validity always refers to the degree to which that evidence supports the inferences that are made from the scores. The inferences regarding specific uses of a test are validated, not the test itself.

Howell’s (1992) view of validity of the test is; a valid test must measure specifically what it is intended to measure.

According to Messick the validity is a matter of degree, not absolutely valid or absolutely invalid. He advocates that, over time, validity evidence will continue to gather, either enhancing or contradicting previous findings. Overall we can say that in terms of assessment, validity refers to the extent to which a test's content is representative of the actual skills learned and whether the test can allow accurate conclusions concerning achievement. Therefore validity is the extent to which a test measures what it claims to measure. It is vital for a test to be valid in order for the results to be accurately applied and interpreted. Let’s consider the following examples.

Examples:

1. Say you are assigned to observe the effect of strict attendance policies on class participation. After observing two or three weeks you reported that class participation did increase after the policy was established.

2. Say you are intended to measure the intelligence and if math and vocabulary truly represent intelligence then a math and vocabulary test might be said to have high validity when used as a measure of intelligence.

A test has validity evidence, if we can demonstrate that it measures what it says to measure. For instance, if it is supposed to be a test for fifth grade arithmetic ability, it should measure fifth grade arithmetic ability and not the reading ability.

Test Validity and Test Validation

Tests can take the form of written responses to a series of questions, such as the paper-and-pencil tests, or of judgments by experts about behaviour in the classroom/school, or for a work performance appraisal. The form of written test results also vary from pass/fail, to holistic judgments, to a complex series of numbers meant to convey minute differences in behaviour.

Regardless of the form a test takes, its most important aspect is how the results are used and the way those results impact individual persons and society as a whole. Tests used for admission to schools or programs or for educational diagnosis not only affect individuals, but also assign value to the content being tested. A test that is perfectly appropriate and useful in one situation may be inappropriate or insufficient in another. For example, a test that may be sufficient for use in educational diagnosis may be completely insufficient for use in determining graduation from high school.

Test validity, or the validation of a test, explicitly means validating the use of a test in a specific context, such as college admission or placement into a course. Therefore, when determining the validity of a test, it is important to study the test results in the setting in which they are used. In the previous example, in order to use the same test for educational diagnosis as for high school graduation, each use would need to be validated separately, even though the same test is used for both purposes.

Purpose of Measuring Validity

Most, but not all, tests are designed to measure skills, abilities, or traits that are and are not directly observable. For example, scores on the Scholastic Aptitude Test (SAT) measure developed critical reading, writing and mathematical ability. The score on the SAT that an examinee obtains when he/she takes the test is not a direct measure of critical reading ability, such as degrees centigrade is a direct measure of the heat of an object. The amount of an examinee's developed critical reading ability must be inferred from the examinee's SAT critical reading score.

The process of using a test score as a sample of behaviour in order to draw conclusions about a larger domain of behaviours is characteristic of most educational and psychological tests. Responsible test developers and publishers must be able to demonstrate that it is possible to use the sample of behaviours measured by a test to make valid inferences about an examinee's ability to perform tasks that represent the larger domain of interest.

Validity versus Reliability

A test can be reliable but may not be valid. If test scores are to be used to make accurate inferences about an examinee's ability, they must be both reliable and valid. Reliability is a prerequisite for validity and refers to the ability of a test to measure a particular trait or skill consistently. In simple words we can say that same test administered to same students may yield same score. However, tests can be highly reliable and still not be valid for a particular purpose. Consider the example of a thermometer if there is a systematic error and it measures five degrees higher. When the repeated readings has been taken under the same conditions the thermometer will yield consistent (reliable) measurements, but the inference about the temperature is faulty.

This analogy makes it clear that determining the reliability of a test is an important first step, but not the defining step, in determining the validity of a test.

Methods of Measuring Validity

Validity is the appropriateness of a particular uses of the test scores, test validation is then the process of collecting evidence to justify the intended use of the scores. In order to collect the evidence of validity there are many types of validity methods that provide usefulness of the assessment tools. Some of them are listed below.

Content Validity

The evidence of the content validity is judgmental process and may be formal or informal. The formal process has systematic procedure which arrives at a judgment. The important components are the identification of behavioural objectives and construction of table of specification. Content validity evidence involves the degree to which the content of the test matches a content domain associated with the construct. For example, a test of the ability to add two numbers, should include a range of combinations of digits. A test with only one-digit numbers, or only even numbers, would not have good coverage of the content domain. Content related evidence typically involves Subject Matter Experts (SME's) evaluating test items against the test specifications.

It is a non-statistical type of validity that involves “the systematic examination of the test content to determine whether it covers a representative sample of the behaviour domain to be measured” (Anastasi & Urbina, 1997). For example, does an IQ questionnaire have items covering all areas of intelligence discussed in the scientific literature?

A test has content validity built into it by careful selection of which items to include (Anastasi & Urbina, 1997). Items are chosen so that they comply with the test specification which is drawn up through a thorough examination of the subject domain. Foxcraft et al. (2004, p. 49) note that by using a panel of experts to review the test specifications and the selection of items the content validity of a test can be improved. The experts will be able to review the items and comment on whether the items cover a representative sample of the behaviour domain.

For Example - In developing a teaching competency test, experts on the field of teacher training would identify the information and issues required to be an effective teacher and then will choose (or rate) items that represent those areas of information and skills which are expected from a teacher to exhibit in classroom.

Lawshe (1975) proposed that each rater should respond to the following question for each item in content validity:

Is the skill or knowledge measured by this item?

Essential

Useful but not essential

Not necessary

With respect to educational achievement tests, a test is considered content valid when the proportion of the material covered in the test approximates the proportion of material covered in the course.

There are different types of content validity; the major types face validity and the curricular validity are as below.

1 Face Validity

Face validity is an estimate of whether a test appears to measure a certain criterion; it does not guarantee that the test actually measures phenomena in that domain. Face validity is very closely related to content validity. While content validity depends on a theoretical basis for assuming if a test is assessing all domains of a certain criterion (e.g. does assessing addition skills yield in a good measure for mathematical skills? - To answer this you have to know, what different kinds of arithmetic skills mathematical skills include ) face validity relates to whether a test appears to be a good measure or not. This judgment is made on the "face" of the test, thus it can also be judged by the amateur.

Face validity is a starting point, but should NEVER be assumed to be provably valid for any given purpose, as the "experts" may be wrong.

For example- suppose you were taking an instrument reportedly measuring your attractiveness, but the questions were asking you to identify the correctly spelled word in each list. Not much of a link between the claim of what it is supposed to do and what it actually does.Possible Advantage of Face Validity...

• If the respondent knows what information we are looking for, they can use that “context” to help interpret the questions and provide more useful, accurate answers.

Possible Disadvantage of Face Validity...

• If the respondent knows what information we are looking for, they might try to “bend & shape” their answers to what they think we want 2. Curricular Validity

The extent to which the content of the test matches the objectives of a specific curriculum as it is formally described. Curricular validity takes on particular importance in situations where tests are used for high-stakes decisions, such as Punjab Examination Commission exams for fifth and eight grade students and Boards of Intermediate and Secondary Education Examinations. In these situations, curricular validity means that the content of a test that is used to make a decision about whether a student should be promoted to the next levels should measure the curriculum that the student is taught in schools.

Curricular validity is evaluated by groups of curriculum/content experts. The experts are asked to judge whether the content of the test is parallel to the curriculum objectives and whether the test and curricular emphases are in proper balance. Table of specification may help to improve the validity of the test.

Construct Validity

Before defining the construct validity, it seems necessary to elaborate the concept of construct. It is the concept or the characteristic that a test is designed to measure. A construct provides the target that a particular assessment or set of assessments is designed to measure; it is a separate entity from the test itself. According to Howell (1992) Construct validity is a test’s ability to measure factors which are relevant to the field of study. Construct validity is thus an assessment of the quality of an instrument or experimental design. It says 'Does it measure the construct it is supposed to measure'. Construct validity is rarely applied in achievement test.

Construct validity refers to the extent to which operationalizations of a construct (e.g. practical tests developed from a theory) do actually measure what the theory says they do. For example, to what extent is an IQ questionnaire actually measuring "intelligence"? Construct validity evidence involves the empirical and theoretical support for the interpretation of the construct. Such lines of evidence include statistical analyses of the internal structure of the test including the relationships between responses to different test items. They also include relationships between the test and measures of other constructs. As currently understood, construct validity is not distinct from the support for the substantive theory of the construct that the test is designed to measure. As such, experiments designed to reveal aspects of the causal role of the construct also contribute to construct validity evidence.

Construct validity occurs when the theoretical constructs of cause and effect accurately represent the real-world situations they are intended to model. This is related to how well the experiment is operationalized. A good experiment turns the theory (constructs) into actual things you can measure. Sometimes just finding out more about the construct (which itself must be valid) can be helpful. The construct validity addresses the construct that are mapped into the test items, it is also assured either by judgmental method or by developing the test specification before the development of the test. The constructs have some essential properties the two of them are listed as under:

1. Are abstract summaries of some regularity in nature?

2. Related with concrete, observable entities.

For Example - Integrity is a construct; it cannot be directly observed, yet it is useful for understanding, describing, and predicting human behaviour.

1. Convergent Validity

Convergent validity refers to the degree to which a measure is correlated with other measures that it is theoretically predicted to correlate with.

Put another way, convergent validity is present when measures of constructs that are expected to correlate actually do so. This is similar to concurrent validity (which looks for correlation with other tests).

For example, if students’ scores on a specific mathematics test are similar to their scores on other mathematics tests, then convergent validity is high (there is a positive correlation between the scores from similar tests of mathematics).
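The degree of agreement described here is usually summarized with a correlation coefficient. The Python sketch below computes the Pearson product-moment correlation from its definition. The two score lists are hypothetical, invented for illustration, and the choice of Pearson's r (rather than another correlation index) is an assumption, not something the text prescribes.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of equal length (at least 2 students)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products of deviations from each mean
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("a score list with no variation has no defined correlation")
    return cross / sqrt(ss_x * ss_y)

# Hypothetical scores of the same six students on two mathematics tests
new_math_test = [12, 15, 9, 18, 14, 7]
established_math_test = [30, 36, 25, 40, 33, 20]

r = pearson_r(new_math_test, established_math_test)
print(f"convergent validity coefficient r = {r:.2f}")
```

A coefficient close to +1 would support convergent validity; a value near 0 would not.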

2. Discriminant Validity

Discriminant validity describes the degree to which the operationalization does not correlate with other operationalizations that it theoretically should not be correlated with.

Put another way, discriminant validity is present when constructs that are expected not to relate to each other do not, so that it is possible to discriminate between these constructs. For example, a test designed to assess students’ skills in mathematics shows high discriminant validity if its scores are not positively correlated with scores from tests designed to assess intelligence.

Criterion Validity

Criterion validity evidence involves the correlation between the test and a criterion variable (or variables) taken as representative of the construct. In other words, it compares the test with other measures or outcomes (the criteria) already held to be valid. For example, employee selection tests are often validated against measures of job performance (the criterion), and IQ tests are often validated against measures of academic performance (the criterion).

If the test data and criterion data are collected at the same time, this is referred to as concurrent validity evidence. If the test data are collected first in order to predict criterion data collected at a later point in time, this is referred to as predictive validity evidence.

For example, in a predictive study a company psychologist might measure the job performance of newly hired artists after they have been on the job for six months. He or she would then correlate scores on each predictor with the job performance scores to determine which predictor is best.

Concurrent Validity

According to Howell (1992), “concurrent validity is determined using other existing and similar tests which have been known to be valid as comparisons to a test being developed. There is no other known valid test to measure the range of cultural issues tested for this specific group of subjects”.

Concurrent validity refers to the degree to which scores taken at one point in time correlate with other measures (test, observation or interview) of the same construct that are measured at the same time. Returning to the selection test example, this would mean that the tests are administered to current employees and then correlated with their scores on performance reviews. This measures the relationship between the new test and existing measures; the existing measure is thus the criterion. For example, a measure of creativity should correlate with existing measures of creativity.

For example, concurrent validity can be used to assess the validity of a diagnostic screening test. In this case the predictor (X) is the test and the criterion (Y) is the clinical diagnosis. When the correlation is large, the predictor is useful as a diagnostic tool.

Predictive Validity

Predictive validity indicates how well the test predicts some future behaviour of the examinee. It refers to the degree to which the operationalization can predict (or correlate with) other measures of the same construct that are measured at some time in the future. Again, with the selection test example, this would mean that the tests are administered to applicants, all applicants are hired, their performance is reviewed at a later time, and then their scores on the two measures are correlated. This form of validity evidence is particularly useful and important for aptitude tests, which attempt to predict how well the test taker will do in some future setting.

This measures the extent to which a future level of a variable can be predicted from a current measurement, including correlation with measurements made with different instruments. For example, a political poll is intended to measure future voting intent, and college entry tests should have high predictive validity with regard to final exam results. When the two sets of scores are correlated, the resulting coefficient is called the predictive validity coefficient.

Examples:

1. If higher scores on the Board exams are positively correlated with higher GPAs at university (and lower scores with lower GPAs), then the Board exams are said to have predictive validity.

2. We might theorize that a measure of math ability should be able to predict how well a person will do in an engineering-based profession.

Establishing predictive validity involves the following two steps:

1. Obtain test scores from a group of respondents, but do not use the test in making a decision.

2. At some later time, obtain a performance measure for those respondents, and correlate these measures with the test scores to obtain the predictive validity coefficient.

Scoring the Test

Scoring Objective Test Items

If the student’s answers are recorded on the test paper itself, a scoring key can be made by marking the correct answers on a blank copy of the test. Scoring then is simply a matter of comparing the columns of the answers on this master copy with the columns of answers on each student’s paper. A strip key which consists merely of strips of paper, on which the columns of answers are recorded, may also be used if more convenient. These can easily be prepared by cutting the columns of answers from the master copy of the test and mounting them on strips of cardboard cut from manila folders.

When separate answer sheets are used, a scoring stencil is more convenient. This is a blank answer sheet with holes punched where the correct answers should appear. The stencil is laid over the answer sheet, and the number of answer checks appearing through the holes is counted. When this type of scoring procedure is used, each test paper should also be scanned to make certain that only one answer was marked for each item. Any item containing more than one answer should be eliminated from the scoring.

As each test paper is scored, mark each item that is scored incorrectly. With multiple choice items, a good practice is to draw a red line through the correct answers of the missed items rather than through the student’s wrong answers. This will indicate to the students those items missed and at the same time will indicate the correct answers. Time will be saved and confusion avoided during discussion of the test. Marking the correct answers of the missed items is simple with a scoring stencil. When no answer check appears through a hole in the stencil, a red line is drawn across the hole.
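The stencil procedure can be mimicked in a few lines of Python. This is a minimal sketch: the option letters, the `score_answer_sheet` name and the representation of each response as a set of marked options are assumptions made for illustration. Items with more than one mark (or no mark) earn no credit, and the function returns the missed items together with their correct answers, mirroring the practice of marking the correct answer on the paper.

```python
def score_answer_sheet(key, responses):
    """Score one answer sheet against the scoring key.

    key: correct option for each item, e.g. ["b", "d", "a"]
    responses: for each item, the set of options the student marked
    Returns (score, missed), where missed lists (item_number, correct_option).
    """
    if len(key) != len(responses):
        raise ValueError("answer sheet and key must have the same number of items")
    score = 0
    missed = []
    for item_number, (correct, marked) in enumerate(zip(key, responses), start=1):
        # Credit only a single mark on the correct option; multiple marks are eliminated
        if marked == {correct}:
            score += 1
        else:
            missed.append((item_number, correct))
    return score, missed

key = ["b", "d", "a", "c", "a"]
student = [{"b"}, {"a"}, {"a", "c"}, {"c"}, set()]
score, missed = score_answer_sheet(key, student)
print(score)   # 2
print(missed)  # [(2, 'd'), (3, 'a'), (5, 'a')]
```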

In scoring objective tests, each correct answer is usually counted as one point, because an arbitrary weighting of items makes little difference in the students’ final scores. If some items are counted as two points, some as one point, and some as half a point, the scoring becomes more complicated without any accompanying benefit: scores based on such weightings will be similar to those from the simpler procedure of counting each item as one point. When a test consists of a combination of objective items and a few, more time-consuming, essay questions, however, more than a single point is needed to distinguish several levels of response and to reflect the disproportionate time devoted to each of the essay questions.

When students are told to answer every item on the test, a student’s score is simply the number of items answered correctly. There is no need to consider wrong answers or to correct for guessing. When all students answer every item on the test, the students will be ranked in the same order whether the score is the number right or a score corrected for guessing.
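Why the ranking does not change can be seen from the correction formula most commonly used, S = R - W/(k - 1), where R is the number right, W the number wrong and k the number of alternatives per item (this formula is an assumption here; the text does not give one). If every student answers all N items, then W = N - R, so S = R·k/(k - 1) - N/(k - 1), which always increases as R increases. The hypothetical scores below illustrate this.

```python
def corrected_for_guessing(right, wrong, options):
    """Conventional correction for guessing: S = R - W / (k - 1)."""
    return right - wrong / (options - 1)

def ranking(scores):
    """Student names ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)

n_items, k = 40, 4  # hypothetical test: 40 four-option items
# Hypothetical number-right scores; every student answered all items, so W = N - R
raw = {"Asha": 34, "Bilal": 28, "Chen": 31, "Dana": 22}
corrected = {name: corrected_for_guessing(r, n_items - r, k) for name, r in raw.items()}

print(ranking(raw))        # ['Asha', 'Chen', 'Bilal', 'Dana']
print(ranking(corrected))  # ['Asha', 'Chen', 'Bilal', 'Dana']
print(corrected)           # {'Asha': 32.0, 'Bilal': 24.0, 'Chen': 28.0, 'Dana': 16.0}
```

The corrected scores are lower, but the order of students is identical, which is why the correction adds nothing when every item is answered.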

A simplified form of item analysis is all that is necessary or warranted for classroom tests. Because most classroom groups consist of 20 to 40 students, an especially useful procedure is to compare the responses of the ten highest-scoring students with those of the ten lowest-scoring students. As we shall see later, keeping the upper and lower groups at ten students each simplifies the interpretation of the results. It is also a reasonable number for analysis in groups of 20 to 40 students. For example, with a small classroom group of 20 students, it is best to use the upper and lower halves to obtain dependable data, whereas with a larger group of 40 students, use of the upper and lower 25 percent is quite satisfactory. For more refined analysis, the upper and lower 27 percent is often recommended, and most statistical guides are based on that percentage.

To illustrate the method of item analysis, suppose we have just finished scoring 32 test papers for a sixth-grade science unit on weather. Our item analysis might then proceed as follows:

1. Rank the 32 test papers in order from the highest to the lowest score.

2. Select the ten papers with the highest total scores and the ten papers with the lowest total scores.

3. Put aside the middle 12 papers, as they will not be used in the analysis.

4. For each test item, tabulate the number of students in the upper and lower groups who selected each alternative. This tabulation can be made directly on the test paper or on the test item card.

5. Compute the difficulty of each item (percentage of the students who got the item right).

6. Compute the discriminating power of each item (difference between the number of students in the upper and lower groups who got the item right).

7. Evaluate the effectiveness of distracters in each item (attractiveness of the incorrect alternatives).
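Steps 1 to 4 and step 7 can be sketched in Python as below. This is an illustration under stated assumptions: each paper is represented as a (total score, answers) pair with one option letter per item, the function names are hypothetical, and the distracter check uses a common rule of thumb (an incorrect alternative is flagged unless it attracted more lower-group than upper-group students, which also catches alternatives nobody chose). To keep the example short it uses eight papers and groups of three; for the 32 papers above, `group_size=10` leaves the middle 12 papers unused.

```python
from collections import Counter

def split_groups(papers, group_size=10):
    """Steps 1-3: rank papers by total score; return the upper and lower groups.

    papers: list of (total_score, answers) pairs, answers holding one option
    letter per item. Papers between the two groups are set aside.
    """
    if 2 * group_size > len(papers):
        raise ValueError("upper and lower groups would overlap")
    ranked = sorted(papers, key=lambda paper: paper[0], reverse=True)
    return ranked[:group_size], ranked[-group_size:]

def tabulate_item(upper, lower, item):
    """Step 4: count how many students in each group chose each alternative."""
    upper_counts = Counter(answers[item] for _, answers in upper)
    lower_counts = Counter(answers[item] for _, answers in lower)
    return upper_counts, lower_counts

def weak_distracters(upper_counts, lower_counts, key, options="abcd"):
    """Step 7: flag incorrect alternatives that did not attract more
    lower-group than upper-group students (including ones nobody chose)."""
    return [option for option in options
            if option != key and lower_counts[option] <= upper_counts[option]]

# Hypothetical class of eight papers, two items each (keys: item 0 = "b", item 1 = "a")
papers = [(38, "ba"), (35, "bc"), (33, "ba"), (30, "bd"),
          (27, "ca"), (22, "da"), (20, "cb"), (18, "ab")]
upper, lower = split_groups(papers, group_size=3)
for item, key in enumerate("ba"):
    up, low = tabulate_item(upper, lower, item)
    print(item, dict(up), dict(low), weak_distracters(up, low, key))
```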

Although item analysis by inspection will reveal the general effectiveness of a test item and is satisfactory for most classroom purposes, it is sometimes useful to obtain a more precise estimate of item difficulty and discriminating power. This can be done by applying relatively simple formulas to the item-analysis data.

Computing item difficulty:

The difficulty of a test item is indicated by the percentage of students who get the item right. Hence, we can compute item difficulty (P) by means of the following formula, in which R equals the number of students who got the item right, and T equals the total number of students who tried the item.

P = (R / T) × 100
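As a quick check of the formula, here is a minimal Python version. The counts in the usage line are hypothetical: with upper and lower groups of ten (T = 20), suppose 14 students got the item right.

```python
def item_difficulty(right, tried):
    """Item difficulty P = (R / T) x 100, the percentage who got the item right."""
    if tried <= 0:
        raise ValueError("T (students who tried the item) must be positive")
    return 100 * right / tried

# Hypothetical: 14 of the 20 students in the upper and lower groups got the item right
print(item_difficulty(14, 20))  # 70.0
```

Note that a higher P means an easier item, since more students answered it correctly.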

The discriminating power of an achievement test item refers to the degree to which it discriminates between students with high and low achievement. Item discriminating power (D) can be obtained by subtracting the number of students in the lower group who get the item right (RL) from the number of students in the upper group who get the item right (RU) and dividing by one-half the total number of students included in the item analysis (.5T). Summarized in formula form, it is:

D = (RU - RL) / (.5T)

An item with maximum positive discriminating power is one in which all students in the upper group get the item right and all the students in the lower group get the item wrong. This results in an index of 1.00, as follows:

D = (10 - 0) / 10 = 1.00

An item with no discriminating power is one in which an equal number of students in both the upper and lower groups get the item right. This results in an index of .00, as follows:

D = (10 - 10) / 10 = .00
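The discrimination index can be computed the same way. In this minimal sketch T is the number of students in both groups combined (20 when each group has ten students); the first two calls reproduce the worked examples above, and the third uses hypothetical counts. A negative D would mean the lower group outperformed the upper group on the item.

```python
def discriminating_power(right_upper, right_lower, total):
    """D = (RU - RL) / (.5T), where T counts the students in both groups."""
    if total <= 0:
        raise ValueError("T must be positive")
    return (right_upper - right_lower) / (0.5 * total)

print(discriminating_power(10, 0, 20))   # 1.0  (maximum positive discrimination)
print(discriminating_power(10, 10, 20))  # 0.0  (no discrimination)
print(discriminating_power(8, 4, 20))    # 0.4  (hypothetical item)
```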

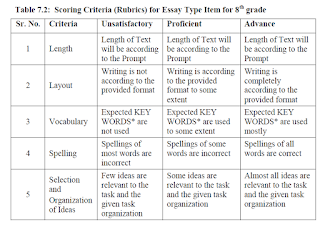

Scoring Essay Type Test Items

According to N.E. Gronlund (1990), the chief weakness of the essay test is the difficulty of scoring. The objectivity of scoring the essay questions may be improved by following a few rules developed by test experts.

a. Prepare a scoring key in advance. The scoring key should include the major points of the acceptable answer, the features of the answer to be evaluated, and the weights assigned to each. To illustrate, suppose the question is “Describe the main elements of teaching.” Suppose also that this question carries 20 marks. We can prepare a scoring key for the question as follows.

i. Outline of the acceptable answer. There are four elements of teaching: the definition of instructional objectives, the identification of the entering behaviour of students, the provision of the learning experiences, and the assessment of the students’ performance.

ii. Main features of the answer and the weights assigned to each.

- Content: Allow 4 points for each element of teaching.

- Comprehensiveness: Allow 2 points.

- Logical organization: Allow 2 points.

- Irrelevant material: Deduct up to a maximum of 2 points.

- Misspelling of technical terms: Deduct 1/2 point for each mistake, up to a maximum of 2 points.

- Major grammatical mistakes: Deduct 1 point for each mistake, up to a maximum of 2 points.

- Poor handwriting, misspelling of non-technical terms and minor grammatical errors: ignore.

Preparing the scoring key in advance is useful since it provides a uniform standard for evaluation.
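A scoring key like this one can also be written down as a small function, which makes the caps on each deduction explicit (4 points × 4 elements + 2 + 2 = 20 marks). This is a sketch: the function and parameter names are hypothetical, the teacher still judges how many content points each element earns, and clamping the final score at zero is an added assumption that the key itself does not state.

```python
def score_essay(content, comprehensiveness, organization,
                irrelevant_penalty, technical_misspellings, major_grammar_errors):
    """Apply the 20-mark key for "Describe the main elements of teaching."

    content: points the teacher awards for each of the four elements (0-4 each).
    Poor handwriting, non-technical misspellings and minor grammatical errors
    are ignored by the key, so they are not parameters.
    """
    if len(content) != 4 or any(not 0 <= p <= 4 for p in content):
        raise ValueError("expected four element scores between 0 and 4")
    credit = sum(content) + min(comprehensiveness, 2) + min(organization, 2)
    deductions = (
        min(irrelevant_penalty, 2)                # irrelevant material, max 2
        + min(0.5 * technical_misspellings, 2)    # 1/2 point each, max 2
        + min(1 * major_grammar_errors, 2)        # 1 point each, max 2
    )
    # Assumption: the key does not say what happens below zero, so clamp at 0
    return max(credit - deductions, 0)

print(score_essay([4, 3, 4, 2], 2, 1, 1, 3, 3))  # 11.5
```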

b. Use an appropriate scoring method. Two scoring methods are commonly used by classroom teachers: the point method and the rating method.

In the point method, the teacher compares each answer with the acceptable answer and assigns a given number of points according to how well each answer approximates the acceptable answer. This method is suitable for restricted-response questions, since in this type each feature of the answer can be identified and given proper point values. For example, suppose the question is: “List five hypotheses that might explain why nations go to war.” In this question, we can easily assign a point value to each hypothesis and evaluate each answer accordingly.